I began using console.anthropic.com not out of curiosity but out of necessity, the moment Claude moved from being a demo to being infrastructure. In its simplest form, the Console is Anthropic’s operational layer for deploying Claude in production. It is where developers generate keys, watch token usage, select models, test prompts, and collaborate with teams. Within the first few minutes inside it, you realize this is not a “developer portal” in the old sense. It is a control plane. It governs cost, performance, safety, scale, and experimentation in one place.

The modern AI developer does not ship models. They ship systems powered by models. That distinction explains why the Console exists and why it matters. Claude’s intelligence is upstream. The Console is downstream, where intelligence becomes product. Applications built on Anthropic’s API are provisioned and monitored through this interface: chat assistants, coding copilots, moderation pipelines, enterprise knowledge tools, and autonomous agents.

What makes the Anthropic Console notable is not just that it works, but that it encodes a philosophy about AI deployment. It assumes teams will need to monitor spending in real time, switch models mid-workflow, isolate environments, and audit behavior. It assumes AI is not a feature but a core dependency. It is built accordingly: with analytics instead of guesswork, collaboration instead of silos, and graduated model choices instead of a single blunt instrument.

This article explains how the Console works, what the Claude model hierarchy means in practice, how developers choose between intelligence, speed, and cost, and why console.anthropic.com has quietly become one of the most important interfaces in applied artificial intelligence.

The Console as Infrastructure

The Console functions as the operational backbone of Claude’s ecosystem. It is where every deployment decision is made visible and reversible. API keys are not just generated but scoped, rate-limited, isolated by workspace, and revoked when compromised. This creates a security posture aligned with modern cloud practices rather than hobbyist experimentation.

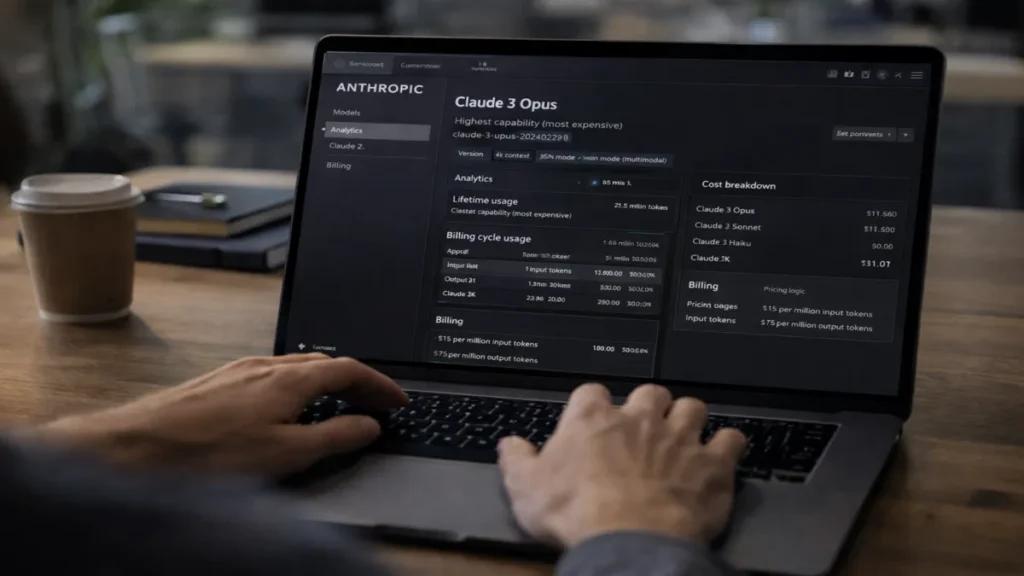

Usage analytics operate continuously, showing token consumption, estimated cost, and rate-limit proximity. This turns AI from an unpredictable cost center into a measurable resource. Developers can see when prompts are inefficient, when models are overpowered for the task, and when usage patterns change unexpectedly.
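To make the idea of cost as a measurable resource concrete, here is a minimal sketch of how a team might estimate spend from logged token counts on its own side. The tier names and per-million-token prices are hypothetical placeholders, not Anthropic's actual pricing, and the `Usage` record is an assumed shape rather than anything the Console exports.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens. Real prices differ by
# model and change over time, so treat these numbers as placeholders.
PRICES = {
    "large": {"input": 15.00, "output": 75.00},
    "medium": {"input": 3.00, "output": 15.00},
    "small": {"input": 1.00, "output": 5.00},
}


@dataclass
class Usage:
    """Token counts for one request (assumed record shape)."""
    tier: str
    input_tokens: int
    output_tokens: int


def estimate_cost(usage: Usage) -> float:
    """Return the estimated USD cost of a single request."""
    price = PRICES[usage.tier]
    total = (usage.input_tokens * price["input"]
             + usage.output_tokens * price["output"])
    return total / 1_000_000


def total_cost(log: list[Usage]) -> float:
    """Sum the estimated cost over a list of logged requests."""
    return sum(estimate_cost(u) for u in log)
```

Comparing `total_cost` for the same log under two different tiers is one simple way to spot a model that is overpowered for its task.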

The Workbench inside the Console allows prompts to be tested directly against live models with different reasoning modes, temperature settings, and formats. This replaces the traditional loop of edit-deploy-observe with a tighter feedback cycle that accelerates both learning and optimization.

The result is that AI development begins to resemble infrastructure engineering rather than model tinkering. The Console becomes the layer where operational discipline is imposed on probabilistic systems.

Claude’s Model Hierarchy

Anthropic’s Claude models are structured as a capability ladder rather than a single universal model.

Claude Opus 4.5

Claude Opus 4.5 sits at the top. It is designed for frontier reasoning: multi-step logic, ambiguity resolution, complex codebases, and autonomous agents. It is slower and more expensive, but capable of sustained cognitive work over long contexts.

Claude Sonnet 4.5

Claude Sonnet 4.5 is the production default. It delivers most of Opus’s capability at a fraction of the cost and with higher throughput. This makes it suitable for the majority of real-world applications, from coding assistants to enterprise analytics.

Claude Haiku 4.5

Claude Haiku 4.5 is optimized for speed and volume. It trades depth for responsiveness, making it ideal for chatbots, moderation, classification, and near-real-time applications.

Model Comparison

| Model | Strength | Latency | Cost | Use Case |
|---|---|---|---|---|
| Opus 4.5 | Deep reasoning | High | High | Agents, research, complex workflows |
| Sonnet 4.5 | Balanced intelligence | Medium | Medium | Production apps, coding |
| Haiku 4.5 | Speed | Low | Low | Chat, moderation, tagging |

This hierarchy allows teams to architect systems that dynamically select intelligence levels instead of paying for maximum capability everywhere.
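One way to picture dynamic selection is a small routing function that maps a task category to a tier. This is only a sketch: the task labels and the `choose_tier` helper are hypothetical, the mapping simply mirrors the use cases listed in the comparison table, and the enum values are labels rather than real API model identifiers.

```python
from enum import Enum


class Tier(Enum):
    HAIKU = "haiku"
    SONNET = "sonnet"
    OPUS = "opus"


# Hypothetical task labels, grouped after the comparison table.
FAST_TASKS = {"chat", "moderation", "tagging", "classification"}
DEEP_TASKS = {"agent", "research", "complex_workflow"}


def choose_tier(task: str) -> Tier:
    """Pick the cheapest tier that plausibly suits the task category."""
    if task in DEEP_TASKS:
        return Tier.OPUS
    if task in FAST_TASKS:
        return Tier.HAIKU
    # Anything unrecognized falls back to the balanced middle tier.
    return Tier.SONNET
```

A real router would likely weigh more signals, such as input length or a confidence score from a cheaper first pass, but the principle is the same: default low and escalate only when the task demands it.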

Reasoning Modes and Context

Beyond model selection, the Console exposes reasoning modes. Developers can choose standard fast responses or extended reasoning where Claude spends more tokens thinking through multi-step problems. This makes intelligence adjustable, not fixed.
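In application code, switching between the two modes usually comes down to one extra field in the request. The sketch below builds a request body as a plain dictionary, assuming the Messages API shape with an optional `thinking` field that carries a token budget. The `build_request` helper, its defaults, and the placeholder model name are illustrative assumptions, so check the current API reference before relying on the exact field names.

```python
def build_request(model: str, prompt: str, extended: bool = False,
                  thinking_budget: int = 2048,
                  max_tokens: int = 4096) -> dict:
    """Build a request body, optionally enabling extended reasoning."""
    request = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if extended:
        # The thinking budget is spent out of max_tokens, so it has to
        # leave room for the visible answer.
        if thinking_budget >= max_tokens:
            raise ValueError("thinking_budget must be below max_tokens")
        request["thinking"] = {
            "type": "enabled",
            "budget_tokens": thinking_budget,
        }
    return request


# "example-model" is a placeholder, not a real model identifier.
fast = build_request("example-model", "Classify this ticket.")
deep = build_request("example-model", "Plan the migration.", extended=True)
```

Keeping the toggle in one helper makes reasoning depth a per-call decision rather than a property of the whole application.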

Long context support allows entire documents, repositories, or conversations to be processed without truncation, as long as they fit within the model’s context window. This changes what applications are possible. Knowledge bases no longer need to be chunked aggressively. Code analysis can span entire projects. Agents can retain memory across long sessions.
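Treating context as a budget can be as simple as a pre-flight check before sending a request. The following sketch assumes a 200,000-token window and a rough four-characters-per-token heuristic. Both numbers are assumptions for illustration, and a real tokenizer or token-counting endpoint would give more reliable figures.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # assumed; check the model's documented limit
CHARS_PER_TOKEN = 4              # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a string from its length."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(documents: list[str],
                    reserved_for_output: int = 4_096) -> bool:
    """Check whether all documents plus the reply budget fit the window."""
    needed = sum(estimate_tokens(doc) for doc in documents)
    return needed + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

When the check fails, the application can fall back to chunking or retrieval instead of discovering the limit through a rejected request.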

Context is no longer a constraint. It is a design parameter.

Collaboration and Team Workflows

The Console treats AI development as a team sport. Workspaces allow multiple developers to share keys, prompts, and settings. Prompt templates become shared assets. Production-ready API calls can be exported and versioned alongside application code.
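A prompt that lives in version control next to the application might look like the sketch below. The `PromptTemplate` class and the `summarize_ticket` example are hypothetical. The sketch uses Python's standard `string.Template` rather than any Console export format.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    """A named, versioned prompt that can be reviewed like any other code."""
    name: str
    version: str
    body: str

    def render(self, **variables: str) -> str:
        # substitute() raises KeyError when a placeholder is left unfilled,
        # which surfaces a broken prompt before it reaches the model.
        return Template(self.body).substitute(**variables)


SUMMARIZE_V1 = PromptTemplate(
    name="summarize_ticket",
    version="1.0.0",
    body=("Summarize the following support ticket in "
          "$max_sentences sentences:\n\n$ticket"),
)

prompt = SUMMARIZE_V1.render(max_sentences="2", ticket="Printer is offline.")
```

Because the template is frozen and carries a version string, a change to the wording shows up as a reviewable diff rather than an untracked edit.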

This institutionalizes what was previously ad-hoc knowledge. Instead of one developer knowing “the good prompt,” the prompt becomes a documented artifact. Instead of tribal knowledge, teams gain operational memory.

It also enables governance. Access controls define who can deploy, who can modify prompts, and who can view billing. This aligns AI with enterprise compliance and audit requirements.
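The three permissions named above can be modeled as flags attached to roles. This is a conceptual sketch only: the role names and the `can` helper are hypothetical and do not reflect the Console's actual role definitions.

```python
from enum import Flag, auto


class Permission(Flag):
    VIEW_BILLING = auto()
    EDIT_PROMPTS = auto()
    DEPLOY = auto()


# Hypothetical roles; a real organization would define its own.
ROLES = {
    "developer": Permission.EDIT_PROMPTS,
    "release_manager": Permission.EDIT_PROMPTS | Permission.DEPLOY,
    "billing_admin": Permission.VIEW_BILLING,
}


def can(role: str, permission: Permission) -> bool:
    """Return True if the role has been granted the given permission."""
    granted = ROLES.get(role)
    if granted is None:
        # Unknown roles get nothing: deny by default.
        return False
    return permission in granted
```

The deny-by-default branch is the design choice that matters most here, since an unrecognized role should never silently inherit access.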

Why This Matters Strategically

The Anthropic Console is not just a tool. It is a signal about how AI is being normalized inside organizations. It reflects a transition from novelty to dependency.

Companies no longer ask “Can we use AI?” They ask “How do we run AI safely, cheaply, reliably, and at scale?” The Console answers that question by providing visibility, control, and reversibility.

Expert Perspectives

“AI isn’t experimental anymore. It’s operational. And operational systems require dashboards, controls, and accountability,” says a senior platform engineer at a fintech firm.

“The real innovation isn’t just the model. It’s the infrastructure that lets thousands of developers use that model without breaking things,” notes an AI systems architect at a cloud provider.

“What Anthropic has built is not just access to Claude. It’s the layer that makes Claude usable by organizations,” says a product manager at an enterprise SaaS company.

Takeaways

- The Console is the operational layer for deploying Claude, not just a settings page.

- Claude’s tiered model hierarchy enables cost-aware system design.

- Reasoning depth and context length are now configurable parameters.

- Collaboration features turn prompts into shared organizational assets.

- Usage analytics convert AI from a black box into a measurable resource.

- Governance and access control reflect enterprise readiness.

Conclusion

The importance of console.anthropic.com lies not in its interface but in what it represents. It represents the moment when artificial intelligence stopped being a laboratory curiosity and became infrastructure. It shows how intelligence becomes programmable, governable, and scalable.

By making cost visible, intelligence selectable, and behavior testable, the Console turns Claude into a system component rather than a magic box. That shift changes how companies build, how engineers think, and how AI integrates into daily operations.

The future of AI will not be defined only by who builds the smartest model. It will be defined by who builds the most usable intelligence. In that sense, the Anthropic Console is not just a dashboard. It is the bridge between machine cognition and human systems.

FAQs

What is console.anthropic.com?

It is Anthropic’s central platform for managing Claude API usage, models, prompts, billing, and team collaboration.

Which Claude model should most developers start with?

Sonnet 4.5 is the recommended default because it balances intelligence, speed, and cost for most production workloads.

When should Opus 4.5 be used?

For complex reasoning, multi-step workflows, large codebases, or autonomous agent systems that require deep analysis.

What is Haiku 4.5 best for?

High-volume, low-latency tasks such as chatbots, tagging, moderation, and classification.

Why does the Console matter?

It makes AI operational by providing visibility, control, governance, and collaboration, turning models into usable infrastructure.