I first understood what OpenAI was trying to do with ChatGPT Health when I stopped thinking of it as a medical tool and started thinking of it as a translation layer. Most people already have access to more health data than they can interpret: lab results written in clinical shorthand, activity charts buried in phone apps, nutrition logs that pile up without meaning. ChatGPT Health, launched in early January 2026, is designed to sit between people and that data, helping them understand it without pretending to replace doctors.

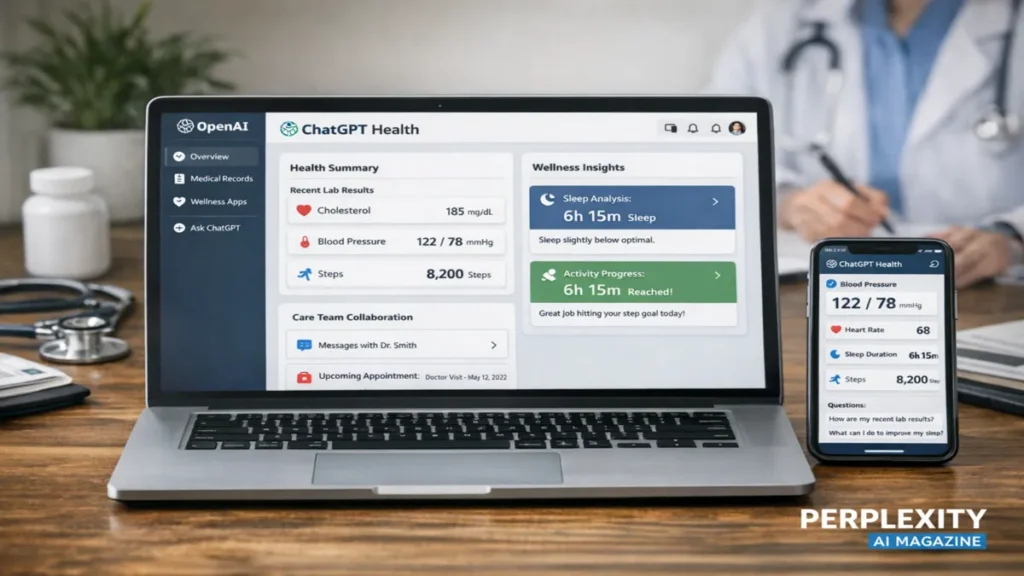

The feature lives inside ChatGPT but exists as its own separate space, with its own memory, its own files, and its own integrations. Users can choose to connect medical records and wellness apps like Apple Health, MyFitnessPal, Function, Peloton, Weight Watchers, AllTrails, and Instacart. Once connected, the system can answer health questions in the context of the user’s own information rather than generic averages.

At the same time, ChatGPT Health is built around explicit limits. It does not diagnose disease. It does not recommend treatments. It does not quietly absorb health data into its training. Instead, it focuses on explaining, summarizing, and helping users prepare for real medical conversations.

This design reflects a broader shift in digital health. The question is no longer whether people will use AI to think about their health, but how that use can be made safer, clearer, and more accountable. ChatGPT Health is OpenAI’s answer to that question, at least in its first form.

Why OpenAI built ChatGPT Health

For years, people have been asking general-purpose chatbots deeply personal health questions. They ask what their blood test means, whether their symptoms are normal, how to eat better, how to exercise safely, how to understand a diagnosis they just received.

OpenAI observed that this behavior was already happening, but in an unstructured way. Health questions were mixed with casual conversation. Sensitive data appeared in ordinary chats. Context was fragmented. Safety boundaries were blurry.

ChatGPT Health emerged as a response to that reality. Instead of discouraging health use entirely, OpenAI chose to design a space that could contain it more responsibly. That meant separating health conversations from everything else, adding stronger privacy protections, and building in clear boundaries about what the system should and should not do.

More than 260 physicians across dozens of countries were consulted during development. Their input shaped both the feature set and its constraints. The goal was not to create an AI doctor, but an AI assistant that could help people be better patients.

In that sense, ChatGPT Health is less about automation and more about orientation. It helps users orient themselves inside their own data and inside the healthcare system they already depend on.

How ChatGPT Health is structured

ChatGPT Health appears as a dedicated section inside the ChatGPT interface. It is not just a label. It is a separate environment.

Health chats have their own history and memory. Files uploaded there do not appear elsewhere. Data connected there is not visible outside that space. This separation is not cosmetic. It is a core design choice intended to reduce accidental exposure and misuse.
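The separation described above can be pictured with a small sketch. This is purely illustrative and not how OpenAI implements it: the `ScopedStore` class, its method names, and the space labels `"health"` and `"general"` are all hypothetical. It only shows the idea that an item added in one space is never returned when reading from another.

```python
class ScopedStore:
    """Hypothetical store that keeps each space's items apart (illustration only)."""

    def __init__(self) -> None:
        # Maps a space name to the items added in that space.
        self._items: dict[str, list[str]] = {}

    def add(self, space: str, item: str) -> None:
        # Items are filed under the space they were added in.
        self._items.setdefault(space, []).append(item)

    def visible_from(self, space: str) -> list[str]:
        # Only items from the same space are returned; a copy is handed
        # back so callers cannot modify the store through the result.
        return list(self._items.get(space, []))


store = ScopedStore()
store.add("health", "lab_report.pdf")
store.add("general", "trip_notes.txt")
print(store.visible_from("general"))
```

Reading from the `"general"` space lists only the file added there; the lab report stays inside `"health"`.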

When a user connects an app or a medical record source, they do so deliberately, with explicit consent. Each integration can be turned on or off independently. Users can revoke access at any time.
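A minimal sketch of that consent model, under stated assumptions: the `HealthSpace` class and its `connect`, `revoke`, and `can_read` methods are hypothetical names invented for illustration, not part of any OpenAI API. The point is only that each source requires an explicit opt-in and can be switched off without affecting the others.

```python
from dataclasses import dataclass, field


@dataclass
class HealthSpace:
    """Hypothetical per-integration consent tracker (illustration only)."""

    consented: set[str] = field(default_factory=set)

    def connect(self, source: str, user_consented: bool) -> None:
        # A source is connected only after an explicit opt-in.
        if not user_consented:
            raise PermissionError(f"explicit consent required for {source}")
        self.consented.add(source)

    def revoke(self, source: str) -> None:
        # Revoking one source leaves every other connection untouched.
        self.consented.discard(source)

    def can_read(self, source: str) -> bool:
        return source in self.consented


space = HealthSpace()
space.connect("Apple Health", user_consented=True)
space.connect("MyFitnessPal", user_consented=True)
space.revoke("Apple Health")
print(space.can_read("Apple Health"), space.can_read("MyFitnessPal"))
```

After the revocation, only the MyFitnessPal connection remains readable.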

The system does not automatically pull in historical data unless the integration is designed to do so. In some cases, such as Peloton and AllTrails, the connection focuses on real-time recommendations rather than full history. This reflects a cautious approach that favors privacy over maximal personalization.

Once data is connected, ChatGPT Health can reference it when answering questions. A question about fatigue might be answered in light of recent sleep patterns. A question about cholesterol might be answered in light of a lab report. A question about exercise might be answered in light of activity trends.

But the system avoids making claims that would normally require a clinician. It does not say what a diagnosis is. It does not say what medication to take. It stays in the domain of explanation, pattern recognition, and preparation.

What data can be connected

ChatGPT Health supports a mix of clinical and consumer data sources.

Medical records, where available, include lab results, diagnoses, medications, and visit summaries. These are typically connected through health data intermediaries.

Wellness and lifestyle apps add context around daily behavior. Apple Health provides movement, sleep, and heart metrics. MyFitnessPal provides nutrition logs. Function provides lab-based wellness data. Weight Watchers provides diet planning context. Peloton and AllTrails provide activity suggestions. Instacart bridges planning and action by turning meal plans into grocery lists.

These sources reflect a philosophy that health is not only clinical. It is behavioral, environmental, and habitual. By combining these layers, ChatGPT Health aims to help users see connections that are otherwise scattered across different platforms.

Table: Data sources and roles

| Source | Type | Role |
|---|---|---|
| Medical records | Clinical | Context for lab results and diagnoses |
| Apple Health | Behavioral | Activity, sleep, heart trends |
| MyFitnessPal | Nutritional | Diet patterns and intake |
| Function | Biomarker | Lab-based wellness interpretation |
| Weight Watchers | Dietary | Structured meal planning |
| Peloton | Fitness | Exercise recommendations |
| AllTrails | Outdoor | Nature and movement suggestions |
| Instacart | Logistics | Grocery execution |

Personalization with limits

Personalization is the core promise of ChatGPT Health, but it is deliberately constrained.

The system personalizes by referencing context, not by forming judgments. It might say that a user’s activity level has been lower than usual, but not that they are unhealthy. It might explain that a lab value is above a typical range, but not that it indicates disease.
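The difference between referencing context and forming a judgment can be sketched in a few lines. This is an assumption-laden illustration, not the product's logic: `describe_lab_value` is a hypothetical helper, the reference range is assumed to be supplied by the caller from the lab report itself, and the numbers in the usage line are made up.

```python
def describe_lab_value(name: str, value: float, low: float, high: float, unit: str) -> str:
    """Say where a value sits relative to a reference range, without judging it."""
    if low > high:
        raise ValueError("low must not exceed high")
    if value < low:
        position = "below"
    elif value > high:
        position = "above"
    else:
        position = "within"
    # The wording states position only; it names no condition and gives no advice
    # beyond pointing the reader back to a clinician.
    return (
        f"{name} is {value} {unit}, which is {position} the reference range "
        f"of {low}-{high} {unit} printed on the report. "
        "A clinician can explain what this means for you."
    )


# Made-up marker and numbers, for illustration only.
print(describe_lab_value("Example marker", 7.2, 4.0, 6.0, "units"))
```

The function can only ever say "below", "within", or "above". Anything resembling a diagnosis is outside its vocabulary by construction, which mirrors the constraint described above.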

This distinction matters. It keeps responsibility where it belongs, with clinicians and users themselves. It also protects against the false authority that AI systems can inadvertently project.

One physician involved in development described it as “a map, not a verdict.” Another called it “a lens, not a diagnosis.”

Privacy and trust

Privacy is the defining tension in digital health. Personalization requires data. Trust requires restraint.

ChatGPT Health attempts to navigate this by making privacy visible rather than invisible. Users see what is connected. They see where their data is stored. They see that it is isolated from other conversations. They are told that it is not used to train models.

This does not eliminate all risk. No digital system is risk-free. But it represents a shift away from silent data extraction toward explicit data relationships.

It also reflects a growing recognition that trust is not built through promises alone, but through structure.

Table: Design tradeoffs

| Goal | Approach | Tradeoff |
|---|---|---|
| Personalization | Data integration | Requires user consent |
| Privacy | Isolation and encryption | Limits cross-use |
| Safety | No diagnosis or treatment | Reduces perceived power |
| Trust | Transparency | Slower feature expansion |

Expert perspectives

A digital health researcher described ChatGPT Health as “an interface problem more than a medical problem.” The challenge, she said, is helping people understand what they already have.

A clinician noted that patients often arrive overwhelmed by information. “If this helps them ask better questions,” he said, “that alone is valuable.”

A privacy scholar warned that even well-intentioned systems can drift. “The design has to be defended continuously, not just at launch.”

Takeaways

- ChatGPT Health is a separate, secure health space inside ChatGPT

- Users can connect medical records and wellness apps voluntarily

- The system explains and summarizes but does not diagnose or treat

- Data is isolated and not used for training

- Personalization is context-based, not judgment-based

- Privacy and trust are central to its design

- It reflects a shift toward AI as a health companion, not authority

Conclusion

ChatGPT Health does not promise to make people healthier. It promises to make health easier to understand.

That is a modest claim, and a powerful one. Healthcare is often limited not by knowledge but by comprehension. Information exists, but it is fragmented, technical, and intimidating. By translating that information into something readable and contextual, ChatGPT Health addresses a real human problem.

Its success will not be measured by how many conditions it can name, but by whether people feel more prepared, less confused, and more engaged with their own care.

If it stays within its limits, respects its users, and remains transparent about what it is and is not, it could become something rare in digital health: a tool that adds clarity without taking control.

FAQs

What is ChatGPT Health?

A dedicated space in ChatGPT for connecting and understanding personal health data.

Can it diagnose illness?

No, it only provides explanations and context.

Is my data used for training?

No, health data is isolated and excluded from training.

Can I disconnect apps later?

Yes, integrations can be revoked at any time.

Is it available everywhere?

It is rolling out gradually by region and platform.