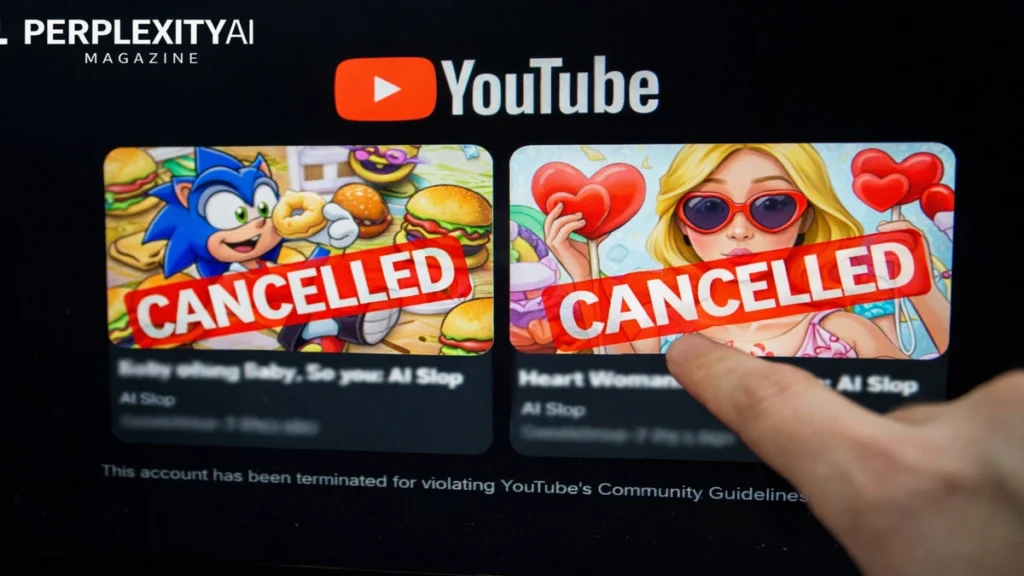

I began tracking YouTube’s quiet but decisive shift when creators started noticing something unusual in early 2026. Entire channels with millions of subscribers were vanishing overnight. Not demonetized. Not warned. Gone. The common thread was not politics or copyright but scale. These channels were built almost entirely on low-effort, mass-produced AI videos that flooded feeds and harvested views.

Within the first months of 2026, YouTube terminated or emptied 16 major AI-driven channels that together had more than 35 million subscribers and roughly 4.7 billion lifetime views. Many of them had been earning millions annually. The action was not tied to a new AI-specific ban. Instead, YouTube enforced long-standing spam and repetitive content rules with a new intensity that caught much of the creator economy off guard.

The intent is clear. YouTube is drawing a hard line between using AI as a tool and running content factories that overwhelm the platform. The crackdown followed internal reviews and third-party research highlighting how automated video pipelines were crowding out original creators.

This article explains what AI slop is, why YouTube acted when it did, which channels were affected, and what the purge means for creators, advertisers, and viewers. It also examines how policy language written years earlier suddenly became the most powerful weapon against low quality automation.

The Meaning of AI Slop in Platform Terms

I approach the phrase "AI slop" not as an insult but as a technical description of content behavior. AI slop refers to videos generated at scale with minimal human input, minimal variation, and minimal editorial judgment. These videos often rely on text-to-video generators, synthetic narration, templated animation, and recycled story structures.

The defining characteristic is not the use of AI. It is repetition. Hundreds or thousands of videos follow the same format, pacing, thumbnail logic, and emotional beats. Titles change slightly. Characters swap names. The underlying structure remains identical.

For YouTube, this matters because its recommendation system rewards consistency and frequency. AI slop channels exploited that logic by publishing faster than any human team could. Over time, the system began favoring quantity over craft.

Media researchers have warned that when platforms optimize only for engagement metrics, automated content will always win unless limits are enforced. In this case, YouTube relied on its existing definition of spam and inauthentic behavior to act.

How the Crackdown Unfolded

I noticed that the enforcement did not happen all at once. In late 2025, several high-volume AI channels were quietly demonetized. In some cases, warning notices cited repetitive formats or a lack of original value. By January 2026, the approach had escalated.

Eleven channels were fully terminated. Five others had every video removed while the channel shell remained. Together, these actions erased billions of views from the platform’s public counters.

What made this unusual was scale. YouTube had previously removed spam networks, but rarely channels with audiences comparable to major media brands. The decision signaled that subscriber count no longer provides insulation when content patterns violate platform standards.

YouTube did not announce the purge with a press release. Instead, confirmation came through creator dashboards, policy emails, and industry reporting. The silence reinforced a message. This was enforcement, not experimentation.

Channels That Defined the AI Slop Era

I want to describe the affected channels not to shame them but to illustrate how the system worked.

One major category was animated storytelling. Channels like CuentosFacianantes produced Dragon Ball-inspired narratives for children using AI animation and synthetic voices. Episodes differed only in character names and plot fragments.

Another category focused on anthropomorphic animals. Super Cat League and similar channels generated endless loops of AI cats and dogs eating fantastical foods or entering absurd scenarios, often paired with canned laughter.

Religious and melodramatic content formed a third pillar. Imperiodejesus generated serialized biblical and soap-opera-style stories with AI narration and visuals, publishing multiple episodes daily.

Finally, fake trailers became a massive draw. Channels mixed real film clips with AI-generated characters to create concept trailers that implied official releases.

The table below summarizes the patterns.

| Content Type | Common Features | Monetization Strategy |
|---|---|---|
| Animated stories | Reused plots, AI voices | Long watch time |
| Animal adventures | Bright visuals, loops | Shorts and autoplay |
| Religious dramas | Serialized scripts | Daily uploads |
| Fake trailers | Familiar IP hooks | Click-through volume |

Why YouTube Acted When It Did

I see three reasons why enforcement intensified in late 2025 and early 2026.

First, scale reached a breaking point. AI slop began occupying a significant share of recommendations, especially in Shorts feeds. Viewers complained of sameness and fatigue.

Second, advertiser pressure increased. Brands became wary of appearing next to content that felt deceptive or low quality, even when it technically followed rules.

Third, creator backlash grew louder. Human creators argued that they could not compete with channels publishing 20 or 30 videos per day through automation.

An industry analyst summarized it bluntly: platforms cannot claim to support creativity while rewarding factories.

YouTube responded by clarifying that originality includes intent, transformation, and added value. Automation without those elements became a liability.

Policy Foundations That Enabled the Purge

I want to be precise here. YouTube did not invent new rules to target AI. The company enforced existing language within the YouTube Partner Program.

The core restriction targets mass-produced, repetitious, or inauthentic content. This language predates generative AI. What changed was how it was interpreted.

In July 2025, YouTube updated guidance to explicitly state that templated videos, AI voiceovers on stock footage, and minor variations on the same format may be denied monetization or removed.

This clarification gave reviewers latitude to judge patterns rather than individual videos. When entire channels showed factory-like behavior, enforcement followed.

The table below contrasts allowed and disallowed use.

| Allowed AI Use | Disallowed Patterns |
|---|---|
| AI-assisted editing | Fully automated pipelines |
| Human commentary | Script recycling |
| Creative transformation | Minor tweaks at scale |
| Limited automation | Upload flooding |

Economic Incentives Behind AI Slop

I cannot ignore the money. Many of these channels earned millions annually through ad revenue. The cost of production was near zero once systems were built.

This created a powerful incentive loop. More uploads meant more impressions. More impressions meant more data to optimize thumbnails and titles. Over time, the content became less about storytelling and more about gaming attention.

Some operators treated channels like financial instruments rather than creative projects. When one niche slowed, they pivoted to another with the same underlying system.

YouTube’s purge disrupted that model. By removing entire libraries of content, the platform signaled that past performance would not protect future earnings.

Impact on Legitimate Creators

I hear mixed emotions from creators who watched the purge unfold.

Relief came first. Many reported immediate improvements in recommendation diversity. Shorts feeds became less repetitive. Discovery felt more human again.

Fear followed. Some creators who use AI responsibly worried about collateral damage. Would automation of any kind trigger penalties?

YouTube attempted to address this by emphasizing context. AI-assisted content remains allowed when humans provide direction, judgment, and originality.

A media professor described the shift as necessary friction. When production becomes too easy, standards must rise.

Broader Implications for Platform Governance

I believe this moment extends beyond YouTube. It reflects how digital platforms are redefining governance in the AI era.

Rules written for spam email and click farms are now being applied to generative media. The principle is the same. Scale without authenticity erodes trust.

Other platforms are watching closely. TikTok, Meta, and streaming services face similar pressures as generative video improves.

The YouTube case suggests that enforcement will focus less on tools and more on behavior. How content is produced, not what produces it, becomes the standard.

Takeaways

- YouTube removed 16 major AI slop channels in early 2026

- Roughly 4.7 billion views disappeared from the platform

- Enforcement relied on existing spam and repetition rules

- AI itself was not banned, only low-effort automation

- Human creators gained visibility after the purge

- Advertiser trust played a key role

- Platform governance is shifting toward pattern based enforcement

Conclusion

I see YouTube’s crackdown not as a rejection of AI but as a recalibration of values. The platform is asserting that creativity cannot be reduced to throughput alone. Tools may accelerate production, but meaning still requires human judgment.

By deleting some of its most viewed automated channels, YouTube accepted short-term disruption in exchange for long-term credibility. That choice carries risk. Enforcement errors can alienate creators. Overreach can stifle experimentation.

Yet doing nothing carried greater danger. A platform flooded with synthetic sameness loses cultural relevance. Viewers disengage. Advertisers retreat.

The message from 2026 is clear. AI belongs on YouTube, but only when guided by intent, originality, and responsibility. The era of unchecked content factories is ending. What replaces it will define online video for the next decade.

FAQs

What is AI slop on YouTube?

AI slop refers to mass-produced, repetitive videos generated with minimal human input and little creative variation.

Did YouTube ban AI content?

No. YouTube allows AI-assisted videos that include original ideas, commentary, or creative transformation.

How many channels were removed?

Sixteen major channels were affected, either deleted entirely or stripped of all videos.

Why were fake trailers targeted?

They reused familiar footage with minimal changes, creating misleading impressions at scale.

Will more purges happen?

YouTube has indicated ongoing enforcement based on behavior patterns, not one time actions.