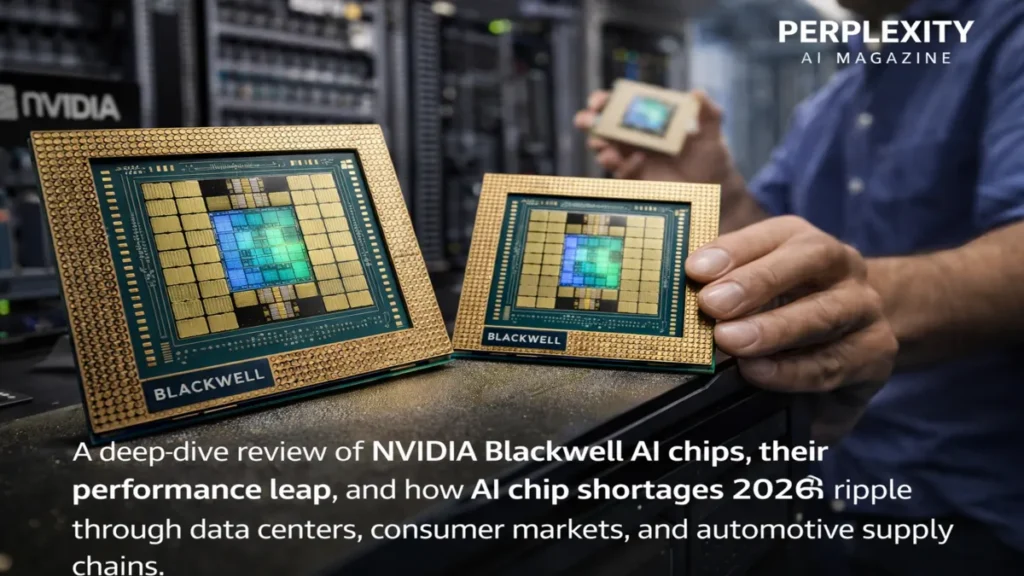

The AI industry is grappling with severe supply constraints in what analysts are calling the AI chip shortages of 2026. At the center of this bottleneck is NVIDIA’s Blackwell architecture, launched in 2024 and iterated with Blackwell Ultra in 2025. Designed for the most demanding generative AI and reasoning workloads, these GPUs combine dual-die configurations, large HBM3E memory capacity, and NVFP4 precision optimized for inference and model training. According to NVIDIA, Blackwell systems deliver up to 30 times faster inference than Hopper GPUs while using up to 25 times less energy for large models, setting new benchmarks in AI performance.

Despite these breakthroughs, explosive demand from hyperscale cloud providers and AI data centers has created persistent shortages. Enterprises face delays of 6–12 months as production struggles to keep pace. Specialized rack-scale systems like GB300 NVL72 also require advanced cooling solutions, adding further deployment complexity. The scarcity affects not only cloud infrastructure but also the consumer electronics and automotive sectors, forcing reallocation of DRAM and other memory components and triggering feature reductions in EVs and AI-enabled devices.

This article examines NVIDIA’s Blackwell architecture and its performance metrics, the causes and consequences of the 2026 AI chip shortages, the downstream impacts on industries, and the broader strategic outlook for AI hardware through 2026.

NVIDIA Blackwell Architecture and Ultra Iteration

The Blackwell architecture represents a major step in AI GPU design. Its dual-die B200 GPU, which the GB200 superchip pairs with a Grace CPU, integrates 208 billion transistors and up to 192GB of HBM3E memory per GPU. These capabilities allow large AI models to run with fewer memory bottlenecks. The architecture uses NVFP4 precision for reasoning tasks and adds attention acceleration, delivering up to two times faster softmax calculations and lower latency for long-context large language models.

Blackwell Ultra extends these improvements with higher throughput and efficiency, enabling data centers to achieve up to 50 times the inference output of Hopper-based deployments. Power efficiency is also a key feature: GPT-4-scale training is claimed to consume 25 times less energy per operation than on Hopper GPUs. Early reviews praised these enhancements, particularly the ability to scale reasoning and inference across multiple devices, although rack-scale configurations such as GB300 NVL72 still depend on advanced cooling.

Blackwell Ultra vs Hopper Features

| Feature | Blackwell Ultra vs Hopper | Key Impact |
|---|---|---|
| NVFP4 Compute | 15 petaFLOPS (7.5× Hopper) | Faster inference on smaller models |
| Attention Acceleration | 2× faster softmax | Lower latency for long-context LLMs |
| Rack Throughput | 50× AI factory output | Scalable reasoning inference |
| Power Efficiency | 25× less for GPT-4 training | Sustainable mega-clusters |
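As a quick sanity check on the table, a stated multiplier can be turned back into the Hopper baseline it implies. The following is a minimal sketch that uses only the NVFP4 figures quoted above; the function name `implied_baseline` is illustrative, and the result is a figure implied by the article's ratio, not an official specification.

```python
def implied_baseline(new_value: float, speedup: float) -> float:
    """Return the baseline value implied by a new value and its stated speedup."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return new_value / speedup


# Figures quoted in the table above.
ultra_nvfp4_pflops = 15.0  # Blackwell Ultra NVFP4 compute, in petaFLOPS
speedup_vs_hopper = 7.5    # stated multiple over Hopper

hopper_pflops = implied_baseline(ultra_nvfp4_pflops, speedup_vs_hopper)
print(f"Implied Hopper baseline: {hopper_pflops:.1f} petaFLOPS")
```

With the table's numbers, the implied Hopper baseline works out to 2.0 petaFLOPS. The same helper can be applied to any other row that pairs an absolute value with a multiplier.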

AI Chip Shortages 2026: Causes and Dynamics

The 2026 AI chip shortages are driven by the convergence of high demand for advanced AI workloads and structural supply limitations. TSMC’s production capacity for advanced nodes remains constrained, particularly for CoWoS packaging and HBM3E memory integration. As hyperscalers prioritize GB200 and GB300 GPU deployments, smaller orders are delayed, which deepens the scarcity. Geopolitical tensions also prompt region-specific variants, especially for China, adding complexity to allocation and production planning.

Enterprise and cloud data center operators, which consume 70–80 percent of the available high-end GPU supply, are the first to experience delays. Consumer electronics manufacturers and automotive OEMs face secondary impacts as memory and packaging resources are diverted to meet hyperscale requirements. These shortages are projected to persist through the first half of 2026, with limited relief expected until supply chain expansions and fab scaling are fully realized.
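The allocation dynamic described above, in which large buyers are served first and smaller orders absorb the shortfall, can be illustrated with a simple priority model. This is a sketch under stated assumptions: the `Order` class, the priority ranking, and every quantity are hypothetical, chosen so that the hyperscaler share falls inside the 70–80 percent range cited in the text. It does not represent any vendor's actual allocation process.

```python
from dataclasses import dataclass


@dataclass
class Order:
    buyer: str
    priority: int  # lower number is served first (hypothetical ranking)
    units: int     # GPUs requested (hypothetical)


def allocate(supply: int, orders: list[Order]) -> dict[str, int]:
    """Fill orders in priority order until the supply runs out."""
    allocation: dict[str, int] = {}
    remaining = supply
    for order in sorted(orders, key=lambda o: o.priority):
        granted = min(order.units, remaining)
        allocation[order.buyer] = granted
        remaining -= granted
    return allocation


# Hypothetical demand of 1,150 GPUs against 1,000 available.
orders = [
    Order("enterprise", priority=2, units=300),
    Order("hyperscaler", priority=1, units=750),
    Order("startup", priority=3, units=100),
]
result = allocate(1000, orders)
for buyer, granted in result.items():
    print(f"{buyer}: {granted}")
```

In this toy scenario the hyperscaler order is filled in full, the enterprise order is only partly filled, and the lowest-priority order receives nothing, which mirrors the delays smaller buyers face when supply is short.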

AI Chip Shortage Timeline by Sector

| Industry | First Impact | Key Constraint |
|---|---|---|
| Hyperscalers | January 2026 | GPU/HBM allocation |
| Consumer Electronics | February 2026 | DRAM/NAND availability |
| Automotive | March 2026 | Memory for ADAS and EV systems |
| PCs/Servers | Q2 2026 | Edge AI chip supply |

Impact on Hyperscale Cloud and Enterprise AI

Hyperscale cloud providers such as AWS, Google Cloud, and Microsoft Azure feel the earliest effects of the 2026 AI chip shortages. Backlogs delay AI infrastructure deployments by up to a year, despite ongoing yield improvements at TSMC. These providers prioritize high-bandwidth memory and GB200 racks, which leads to deferred enterprise projects and slower rollout of AI services. The delays affect AI model training schedules, client timelines, and overall cloud compute expansion.

Rack-scale systems like GB300 NVL72 require advanced cooling, compounding integration challenges and extending the timeline for deploying Blackwell Ultra clusters. Despite these difficulties, hyperscalers retain priority allocation, ensuring they maintain leadership in AI service capabilities while downstream sectors contend with limited access.

Consumer Electronics and Automotive Pressures

The consequences of the 2026 AI chip shortages extend to the consumer electronics and automotive industries. Smartphones and AI PCs face 20–30 percent cost increases because the availability of HBM3E and DRAM is constrained. This raises the risk of a nearly 9 percent market contraction in devices that depend on high-performance AI accelerators.

In automotive, EVs and autonomous driving systems are particularly vulnerable. Shortages of DRAM and other memory force OEMs to cut features such as infotainment screens, generative AI assistants, and Level 3+ ADAS in order to conserve chips for essential safety and control functions. Premium EVs like the Tesla Model 3 have seen DRAM costs double from $200 to $400 per unit, representing up to 1 percent of the total bill of materials. De-contenting measures reduce memory requirements by a factor of up to 5.5 for basic systems, but margins still suffer because memory component prices have surged by 60 percent.
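The figures in this paragraph can be combined into a rough worked calculation. The sketch below takes the article's numbers at face value and makes two simplifying assumptions that are not in the source: that the 1 percent share applies to the $400 figure, and that memory spend scales linearly with memory capacity. The function names are illustrative.

```python
def implied_bom(component_cost: float, share_of_bom: float) -> float:
    """Total bill of materials implied by a component cost and its share."""
    if not 0 < share_of_bom <= 1:
        raise ValueError("share_of_bom must be in (0, 1]")
    return component_cost / share_of_bom


def decontented_cost_factor(price_increase: float, memory_reduction: float) -> float:
    """Memory spend relative to baseline after a price surge and de-contenting.

    Assumes spend scales linearly with capacity (a simplification).
    """
    if memory_reduction <= 0:
        raise ValueError("memory_reduction must be positive")
    return (1.0 + price_increase) / memory_reduction


bom = implied_bom(400.0, 0.01)               # $400 at 1 percent of the BOM
factor = decontented_cost_factor(0.60, 5.5)  # 60 percent surge, 5.5x less memory

print(f"Implied BOM: ${bom:,.0f}")
print(f"Memory spend vs. baseline: {factor:.2f}x")
```

Under these assumptions the implied bill of materials is about $40,000, and a fully de-contented basic system would spend roughly 0.29 times its original memory budget even after the price surge. Because 5.5 is quoted as an upper bound, real savings would typically be smaller.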

Automotive Vulnerability

| Vehicle Type | Vulnerability Level | Likely Consequence |
|---|---|---|
| Premium EVs | High | Feature cuts, delayed launches |
| ADAS-Heavy Sedans | Medium-High | Downgraded autonomy |
| Basic ICE Cars | Low | Minimal feature reductions |

Expert Insights on Blackwell and Shortages

Industry experts emphasize the structural nature of the 2026 AI chip shortages:

- Lisa Su, semiconductor analyst, notes, “AI chip shortages 2026 reflect structural supply chain limits rather than cyclical demand.”

- Dr. Arjun Patel, GPU specialist, observes, “Memory and packaging bottlenecks are the true constraints, not wafer production.”

- Anne Richards, senior editor at ChipWorld Review, states, “Advanced packaging ecosystems require years to scale, so shortages will persist despite fab expansions.”

These perspectives highlight that shortages are driven by production realities rather than transient market dynamics.

Strategic Outlook and Future Architectures

Looking forward, NVIDIA plans to introduce the Rubin architecture in the second half of 2026, targeting 3.3 times the speed of Blackwell GPUs. Even so, Blackwell remains the standard for 2026 deployments, with allocations secured through long-term contracts. Enterprises must navigate scarcity carefully, prioritizing high-performance racks while using software optimization to get the most out of existing hardware.

The combination of Blackwell’s architectural advances and persistent supply constraints means that the 2026 AI chip shortages will remain a defining factor for AI infrastructure, consumer electronics, and automotive production.

Takeaways

- NVIDIA Blackwell Ultra GPUs redefine inference and training performance.

- HBM3E memory and advanced packaging bottlenecks drive persistent shortages.

- Hyperscale cloud providers prioritize allocation, delaying smaller enterprise deployments.

- Consumer electronics and automotive industries face cost increases and feature reductions.

- Shortages reflect structural supply limitations rather than short-term market fluctuations.

- Rubin architecture promises higher performance but will require additional production capacity.

Conclusion

The AI chip shortages of 2026 illustrate the delicate balance between technological innovation and manufacturing capacity. NVIDIA’s Blackwell architecture demonstrates unprecedented GPU performance, yet its success has amplified supply constraints across industries. Hyperscale cloud providers maintain a competitive edge through priority allocations, while the consumer electronics and automotive sectors adapt through cost increases and de-contenting. Future architectures, including Rubin, promise even higher performance, but they will also demand expanded infrastructure and memory capacity. The events of 2026 underscore the importance of aligning innovation with scalable supply chains to meet the growing computational demands of AI.

FAQs

What are the AI chip shortages of 2026?

Persistent supply constraints for high-end GPUs and memory components driven by hyperscale AI demand and packaging bottlenecks.

Why is NVIDIA Blackwell important?

Blackwell delivers up to 30x faster inference than Hopper GPUs and supports large AI models with reduced power consumption.

How are consumers affected?

Device costs rise 20–30 percent, and AI features in PCs and smartphones may be limited.

What is the impact on automotive production?

EV and ADAS-equipped vehicles face de-contenting, delayed launches, and higher memory costs.

Will the shortage resolve soon?

Capacity expansions and new fabrication lines may ease constraints, but relief is unlikely in the first half of 2026.